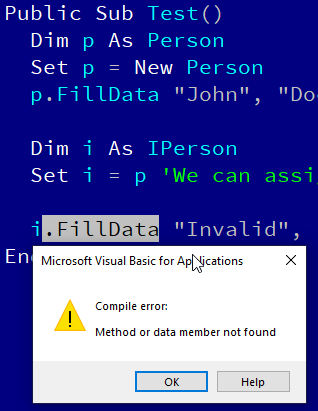

We’ve seen in UserForm1.Show what makes a Smart UI solution brittle, and how to separate the UI concerns from the rest of the logic with the Model-View-Presenter (MVP) UI pattern. MVP works nicely with the MSForms library (UserForms in VBA), just like it does with its .NET Windows Forms successor. While the pattern does a good job of enhancing the testability of application logic, it also comes with its drawbacks: the View’s code-behind (that is, the code module “behind” the form designer) is still littered with noisy event handlers and boilerplate code, and the back-and-forth communication between the View and the Presenter feels somewhat clunky with events and event handlers.

Rubberduck’s UI elements are made with the Windows Presentation Foundation (WPF) UI framework, which completely redefines how everything about UI programming works, starting with the XML/markup-based (XAML) design; but its single most compelling element is just how awesome its data binding capabilities are.

We can leverage in VBA what makes Model-View-ViewModel (MVVM) awesome in C# without going nuts and writing a whole UI framework from scratch, but we’re still going to need a bit of abstract infrastructure to work with. It took the will to do it and only cost a hair or two, but as far as I can tell this works perfectly fine, at least at the proof-of-concept stage.

This article is the first in a series that revolves around MVVM in VBA as I work (very much part-time) on the rubberduckdb content admin tool. There’s quite a bit of code to make this magic happen, so let’s kick this off with what it does and how to use it – subsequent articles will dive into how the MVVM infrastructure internals work. As usual the accompanying code can be found in the examples repository on GitHub (give it a star, and fork it, then make pull requests with your contributions during Hacktoberfest next month and you can get a t-shirt, stickers, and other free stuff, courtesy of Digital Ocean!).

Overview

The code in the examples repository isn’t the reason I wrote this: I mentioned in the previous post that I was working on an application to maintain the website content, and decided to explore the Model-View-ViewModel pattern for that one. Truth be told, MVVM is hands-down my favorite UI pattern, by far. This is simply the cleanest UI code I’ve ever written in VBA, and I love it!

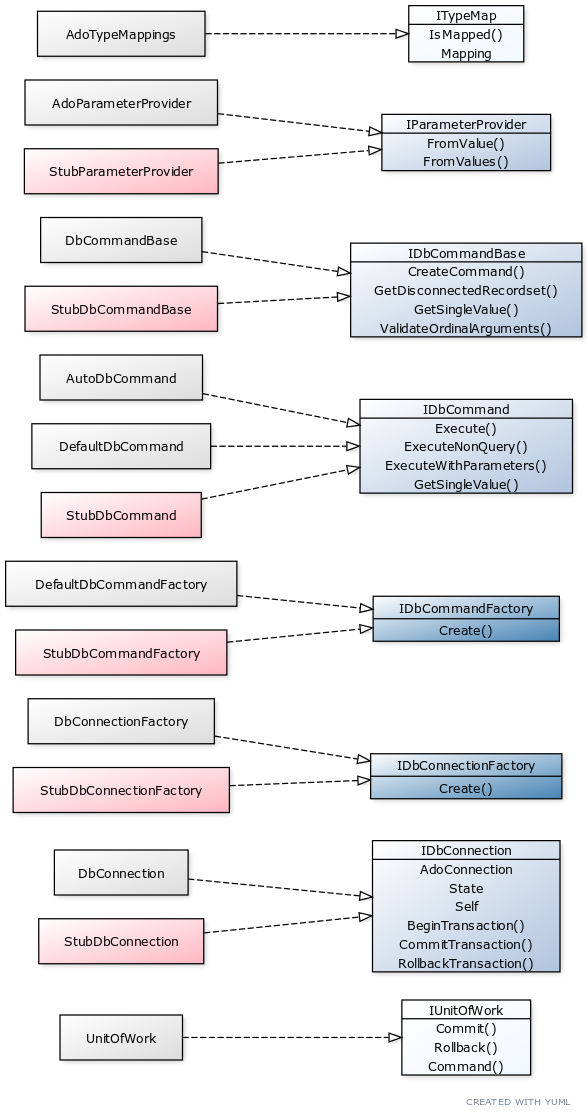

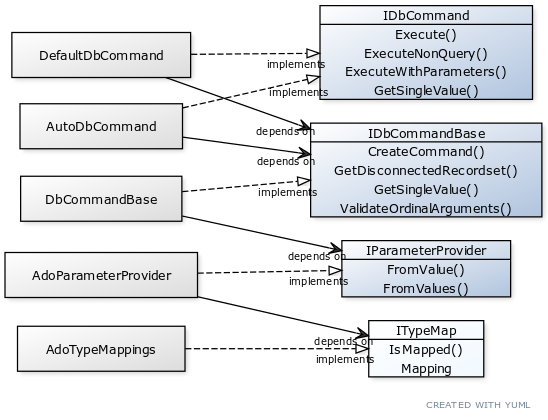

The result is an extremely decoupled, very extensible, completely testable architecture where every user action (“command”) is formally defined, can be programmatically simulated/tested with real, stubbed, or faked dependencies, and can be bound to multiple UI elements and programmatically executed as needed.

MVVM Quick Checklist

These would be the rules to follow as far as relationships go between the components of the MVVM pattern:

- View (i.e. the UserForm) knows about the ViewModel, but not the Model;

- ViewModel knows about commands, but nothing about a View;

- Exactly what the Model actually is/isn’t/should/shouldn’t be is honestly not a debate I’m interested in: I’ll just call whatever set of classes is responsible for hydrating my ViewModel with data my “model”, and sleep at night. What matters is that whatever you call the Model knows nothing of a View or ViewModel; it exists on its own.

Before we dive into bindings and the infrastructure code, we need to talk about the command pattern.

Commands

A command is an object that implements an ICommand interface that might look like this:

    '@Folder MVVM.Infrastructure
    '@ModuleDescription "An object that represents an executable command."
    '@Interface
    '@Exposed
    Option Explicit

    '@Description "Returns True if the command is enabled given the provided binding context (ViewModel)."
    Public Function CanExecute(ByVal Context As Object) As Boolean
    End Function

    '@Description "Executes the command given the provided binding context (ViewModel)."
    Public Sub Execute(ByVal Context As Object)
    End Sub

    '@Description "Gets a user-friendly description of the command."
    Public Property Get Description() As String
    End Property

In the case of a CommandBinding, the Context parameter is always the DataContext / ViewModel (for now anyway), but manual invocations could supply other kinds of parameters. Not all implementations need to account for the ViewModel: a CanExecute function that simply returns True is often perfectly fine. The Description is used to set a tooltip on the target UI element of the command binding.
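Because any caller can hold an ICommand reference, a manual invocation (say, from a unit test or a macro) can respect CanExecute the same way a binding does. Here is a minimal sketch of such a helper in a standard module. It assumes the ICommand interface above; TryExecute is a hypothetical name and is not part of the infrastructure code:

```vba
'@Folder MVVM.Example
Option Explicit

'Hypothetical helper (not part of the MVVM infrastructure): runs a command
'only if it is enabled for the given context, mirroring what a command binding does.
'Returns True if the command was executed.
Public Function TryExecute(ByVal Command As ICommand, ByVal Context As Object) As Boolean
    If Command Is Nothing Then Exit Function              'nothing to run
    If Not Command.CanExecute(Context) Then Exit Function 'disabled for this context
    Command.Execute Context
    TryExecute = True
End Function
```

For example, given some ICancellable View and its ViewModel, `TryExecute(CancelCommand.Create(View), ViewModel)` would put the View in a cancelled state and hide it, exactly as clicking a bound [Cancel] button would.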

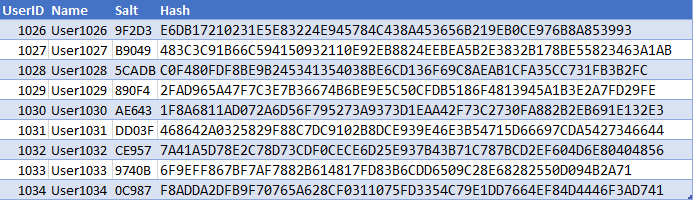

The implementation of a command can be very simple or very complex, depending on the needs. A command might have one or more dependencies. For example, a ReloadCommand might want to be injected with some IDbContext object that exposes a SelectAllTheThings function, and that implementation might pull the things from a database or make them up from hard-coded strings: the command has no business knowing where the data comes from and how it’s acquired.

Each command is its own class, and encapsulates the logic for enabling/disabling its associated control and executing the command. This leaves the UserForm module completely devoid of any logic that isn’t purely a presentation concern – although a lot can be achieved solely with property bindings and validation error formatters.

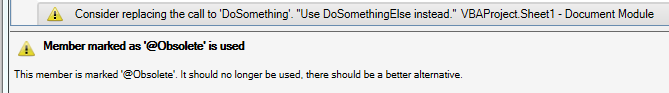

The infrastructure code comes with AcceptCommand and CancelCommand implementations, both useful to wire up [Ok], [Cancel], or [Close] dialog buttons.

AcceptCommand

The AcceptCommand can be used as-is for any View that can be closed with a command involving similar semantics. It is implemented as follows:

    '@Exposed
    '@Folder MVVM.Infrastructure.Commands
    '@ModuleDescription "A command that closes (hides) a View."
    '@PredeclaredId
    Option Explicit
    Implements ICommand

    Private Type TState
        View As IView
    End Type

    Private this As TState

    '@Description "Creates a new instance of this command."
    Public Function Create(ByVal View As IView) As ICommand
        Dim result As AcceptCommand
        Set result = New AcceptCommand
        Set result.View = View
        Set Create = result
    End Function

    Public Property Get View() As IView
        Set View = this.View
    End Property

    Public Property Set View(ByVal RHS As IView)
        GuardClauses.GuardDoubleInitialization this.View, TypeName(Me)
        Set this.View = RHS
    End Property

    Private Function ICommand_CanExecute(ByVal Context As Object) As Boolean
        Dim ViewModel As IViewModel
        If TypeOf Context Is IViewModel Then
            Set ViewModel = Context
            If Not ViewModel.Validation Is Nothing Then
                ICommand_CanExecute = ViewModel.Validation.IsValid
                Exit Function
            End If
        End If
        ICommand_CanExecute = True
    End Function

    Private Property Get ICommand_Description() As String
        ICommand_Description = "Accept changes and close."
    End Property

    Private Sub ICommand_Execute(ByVal Context As Object)
        this.View.Hide
    End Sub

CancelCommand

This command is similar to the AcceptCommand in that it simply invokes a method in the View. This implementation could easily be enhanced by making the ViewModel track “dirty” (modified) state and prompting the user when they are about to discard unsaved changes.

    '@Folder MVVM.Infrastructure.Commands
    '@ModuleDescription "A command that closes (hides) a cancellable View in a cancelled state."
    '@PredeclaredId
    '@Exposed
    Option Explicit
    Implements ICommand

    Private Type TState
        View As ICancellable
    End Type

    Private this As TState

    '@Description "Creates a new instance of this command."
    Public Function Create(ByVal View As ICancellable) As ICommand
        Dim result As CancelCommand
        Set result = New CancelCommand
        Set result.View = View
        Set Create = result
    End Function

    Public Property Get View() As ICancellable
        Set View = this.View
    End Property

    Public Property Set View(ByVal RHS As ICancellable)
        GuardClauses.GuardDoubleInitialization this.View, TypeName(Me)
        Set this.View = RHS
    End Property

    Private Function ICommand_CanExecute(ByVal Context As Object) As Boolean
        ICommand_CanExecute = True
    End Function

    Private Property Get ICommand_Description() As String
        ICommand_Description = "Cancel pending changes and close."
    End Property

    Private Sub ICommand_Execute(ByVal Context As Object)
        this.View.OnCancel
    End Sub

This gives us very good indications about how the pattern wants user actions to be implemented:

- A command class can have a @PredeclaredId annotation and expose a factory method to property-inject any dependencies: here an IView (AcceptCommand) or ICancellable (CancelCommand) object, but a custom SaveChangesCommand would likely get injected with some DbContext service class.

- All commands need a description; that description is user-facing, as a tooltip on the binding target (usually a CommandButton).

- CanExecute can be as simple as an unconditional ICommand_CanExecute = True, or as complex as needed (it has access to the ViewModel context); keep in mind that this method can be invoked relatively often, so it should perform well and return quickly.

A command binding is a simple interface with a simple purpose: attaching a command to a button. Its EvaluateCanExecute method invokes the command’s CanExecute function and accordingly enables or disables the Target control.

By implementing all UI commands as ICommand objects, we keep both the View and the ViewModel free of command logic and Click handlers. By adopting the command pattern, we give ourselves all the opportunities to achieve low coupling and high cohesion. That is, small and specialized modules that depend on abstractions that can be injected from the outside.

Property Bindings

In XAML we use a special string syntax (“markup extensions”) to bind the value of, say, a ViewModel property, to that of a UI element property:

<TextBox Text="{Binding SomeProperty, Mode=TwoWay, UpdateSourceTrigger=PropertyChanged}" />

As long as the ViewModel implements INotifyPropertyChanged and the property fires the PropertyChanged event when its value changes, WPF can automatically keep the UI in sync with the ViewModel and the ViewModel in sync with the UI. WPF data bindings are extremely flexible and can also bind to static and dynamic resources, or other UI elements, and they are actually slightly more complex than that, but this captures the essence.

Obviously MVVM with MSForms in VBA isn’t going to involve any kind of special string syntax, but the concept of a PropertyBinding can very much be encapsulated into an object (and XAML compiles down to objects and methods, too). At its core, a binding is a pretty simple thing: a source, a target, and a method to update them.

Technically nothing prevents binding a target to any object type (although with limitations, since non-user code won’t be implementing INotifyPropertyChanged), but for the sake of clarity:

- The binding Source is the ViewModel

- The SourcePropertyPath is the name of a property of the ViewModel

- The binding Target is the MSForms control

- The binding TargetProperty is the name of a property of the MSForms control

Note that the SourcePropertyPath resolves recursively and can be a property of a property…of a property – as long as the string ultimately resolves to a non-object member.

    .BindPropertyPath ViewModel, "SourcePath", Me.PathBox, _
        Validator:=New RequiredStringValidator, _
        ErrorFormat:=AggregateErrorFormatter.Create(ViewModel, _
            ValidationErrorFormatter.Create(Me.PathBox) _
                .WithErrorBackgroundColor _
                .WithErrorBorderColor, _
            ValidationErrorFormatter.Create(Me.InvalidPathIcon) _
                .WithTargetOnlyVisibleOnError("SourcePath"), _
            ValidationErrorFormatter.Create(Me.ValidationMessage1) _
                .WithTargetOnlyVisibleOnError("SourcePath"))

The IBindingManager.BindPropertyPath method is pretty flexible and accepts a number of optional parameters, while implementing sensible defaults for common MSForms controls’ “default property binding”. For example, you don’t need to specify a TargetProperty when binding a ViewModel property to an MSForms.TextBox: it automatically binds to the Text property, but it can bind to any other property as well.
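Exactly how the binding manager picks that default is an internals topic for a later article. As a rough idea only, a lookup keyed on the control’s TypeName could do it. In this hypothetical sketch, only the TextBox-to-Text mapping is stated above. Label-to-Caption and OptionButton-to-Value are inferred from the example View, which binds those controls without a TargetProperty. The Frame, CheckBox and ToggleButton entries are plain assumptions:

```vba
'@Folder MVVM.Example
Option Explicit

'Hypothetical sketch, not the infrastructure's implementation: returns the name
'of a plausible default target property for an MSForms control type name,
'as returned by TypeName(control). Returns an empty string when there is no
'sensible default, meaning the caller must specify TargetProperty explicitly.
Public Function DefaultTargetPropertyFor(ByVal ControlTypeName As String) As String
    Select Case ControlTypeName
        Case "TextBox"
            DefaultTargetPropertyFor = "Text"
        Case "Label", "Frame"
            DefaultTargetPropertyFor = "Caption"
        Case "CheckBox", "OptionButton", "ToggleButton"
            DefaultTargetPropertyFor = "Value"
        Case Else
            DefaultTargetPropertyFor = vbNullString
    End Select
End Function
```

From the View’s code-behind, `DefaultTargetPropertyFor(TypeName(Me.PathBox))` would return "Text".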

The optional arguments are especially useful for custom data validation, but some of them also control various knobs that determine what the binding updates, and when. The binding mode determines which direction values flow:

| Binding mode | Behavior |
|---|---|
| TwoWayBinding | Binding will update the source when the target changes, and will update the target when the source changes. |
| OneWayBinding | Binding will update the target when the source changes. |
| OneWayToSource | Binding will update the source when the target changes. |
| OneTimeBinding | Binding will only update the target once. |

The update source trigger determines when the binding updates:

| Update source trigger | Behavior |
|---|---|
| OnPropertyChanged | Binding will update when the bound property value changes. |
| OnKeyPress | Binding will update the source at each keypress. Only available for TextBox controls. Data validation may prevent the keypress from reaching the UI element. |
| OnExit | Binding will update the source just before the target loses focus. Data validation may cancel the exit and leave the caret inside. This update source trigger is the most efficient, since it only updates bindings when the user has finished providing a value. |

Property Paths

The binding manager is able to recursively resolve a member path. Suppose your ViewModel has a ThingSection property that is itself a ViewModel with its own bindings and commands, and that object has a Thing property. Then the binding path can legally be “ThingSection.Thing”. As long as the Source is the ViewModel object where a ThingSection property exists, and the ThingSection property yields an object that has a Thing property, all is good and the binding works. If ThingSection were Nothing when the binding is updated, the target would be assigned a default value depending on the type. For example, if ThingSection.Thing was bound to some TextBox1 control and the ThingSection property of the ViewModel was Nothing, then the Text property would end up being an empty string. Note that this default value may be illegal, depending on what data validation is in place.
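The internals are for a later article, but recursive path resolution can be sketched with VBA’s own CallByName function: split the path on dots, walk every intermediate object, then read the last member. This is an assumption about how it could work, not the binding manager’s actual code. ResolvePropertyPath is a hypothetical name, and unlike the behavior described above, it returns Empty rather than a type-specific default when an intermediate object is Nothing:

```vba
'@Folder MVVM.Example
Option Explicit

'Hypothetical sketch: resolves a dotted property path such as "ThingSection.Thing"
'against a Source object, using late-bound property gets.
'Returns Empty if the path is empty, or if Source or any intermediate object is Nothing.
'The last member is expected to be a non-object value (String, Boolean, Long, ...).
Public Function ResolvePropertyPath(ByVal Source As Object, ByVal PropertyPath As String) As Variant
    If Len(PropertyPath) = 0 Then Exit Function

    Dim Parts() As String
    Parts = Split(PropertyPath, ".")

    Dim Current As Object
    Set Current = Source

    'walk every intermediate member; each one must return an object
    Dim i As Long
    For i = LBound(Parts) To UBound(Parts) - 1
        If Current Is Nothing Then Exit Function
        Set Current = CallByName(Current, Parts(i), VbGet)
    Next

    If Current Is Nothing Then Exit Function
    ResolvePropertyPath = CallByName(Current, Parts(UBound(Parts)), VbGet)
End Function
```

Given a ViewModel with a ThingSection property, `ResolvePropertyPath(ViewModel, "ThingSection.Thing")` would read ThingSection.Thing, and it would return Empty while ThingSection is Nothing.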

Data Validation

Every property binding can attach any IValueValidator implementation that encapsulates specialized, bespoke validation rules. The infrastructure code doesn’t include any custom validators, but the example shows how one can be implemented. The interface mandates an IsValid function that returns a Boolean (True when valid), and a user-friendly Message property that the ValidationManager uses to create tooltips.

    '@Folder MVVM.Example
    Option Explicit
    Implements IValueValidator

    Private Function IValueValidator_IsValid(ByVal Value As Variant, ByVal Source As Object, ByVal Target As Object) As Boolean
        IValueValidator_IsValid = Len(Trim$(Value)) > 0
    End Function

    Private Property Get IValueValidator_Message() As String
        IValueValidator_Message = "Value cannot be empty."
    End Property

The IsValid method provides you with the Value being validated, the binding Source, and the binding Target objects, which means every validator has access to everything exposed by the ViewModel; note that the method being a Function strongly suggests that it should not have side-effects. Avoid mutating ViewModel properties in a validator, but the message can be constructed dynamically if the validator is made to hold module-level state… although I would really strive to avoid making custom validators stateful.
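Keeping the rule in a pure function also makes it easy to test in isolation. One detail worth knowing: Trim$ raises run-time error 94 (“Invalid use of Null”) when given Null. That only matters if a bound source can ever hold Null, which a TextBox’s Text never does. Here is a minimal, Null-tolerant sketch of the same rule. IsNonBlankText is a hypothetical name, and RequiredStringValidator’s IsValid could simply delegate to it:

```vba
'@Folder MVVM.Example
Option Explicit

'Hypothetical helper: True when Value holds text that isn't blank.
'Pure function: no side effects, same output for the same input.
Public Function IsNonBlankText(ByVal Value As Variant) As Boolean
    If IsObject(Value) Or IsArray(Value) Then Exit Function                 'not text
    If IsNull(Value) Or IsEmpty(Value) Or IsError(Value) Then Exit Function 'no value at all
    IsNonBlankText = Len(Trim$(CStr(Value))) > 0
End Function
```

Inside the validator, this becomes `IValueValidator_IsValid = IsNonBlankText(Value)`.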

While the underlying data validation mechanics are relatively complex, believe it or not there is no other step needed to implement custom validation for your property bindings: IBindingManager.BindPropertyPath is happy to take in any validator object, as long as it implements the IValueValidator interface.

Presenting Validation Errors

Without taking any steps to format validation errors, commands that can only execute against a valid ViewModel will automatically get disabled, but the input field with the invalid value won’t give the user any clue. By providing an IValidationErrorFormatter implementation when registering the binding, you get to control how the target is formatted given a validation error (colors, font weight), and whether normally hidden UI elements should be displayed.

The ValidationErrorFormatter class meets most simple scenarios. Use the factory method to create an instance with a specific target UI element, then chain builder method calls to configure the formatting inline with a nice, fluent syntax:

    Set Formatter = ValidationErrorFormatter.Create(Me.PathBox) _
        .WithErrorBackgroundColor(vbYellow) _
        .WithErrorBorderColor

| Method | Purpose |
|---|---|
| Create | Factory method; ensures every instance is created with a target UI element. |
| WithErrorBackgroundColor | Makes the target have a different background color given a validation error. If no color is specified, a default “error background color” (light red) is used. |
| WithErrorBorderColor | Makes the target have a different border color given a validation error. If no color is specified, a default “error border color” (dark red) is used. Method has no effect if the UI control isn’t “flat style” or if the border style isn’t “fixed single”. |
| WithErrorForeColor | Makes the target have a different fore (text) color given a validation error. If no color is specified, a default error color (dark red) is used. |
| WithErrorFontBold | Makes the target use a bold font weight given a validation error. Method has no effect if the UI element already uses a bold font face without a validation error. |
| WithTargetOnlyVisibleOnError | Makes the target UI element normally hidden, only to be made visible given a validation error. Particularly useful with aggregated formatters, to bind the visibility of a label and/or an icon control to the presence of a validation error. |

The example code uses an AggregateErrorFormatter to tie multiple ValidationErrorFormatter instances (and thus possibly multiple different target UI controls) to the same binding.
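The fluent syntax works because each builder method stores a setting and then returns the instance it was called on, so the next call in the chain configures the same object. Below is a stripped-down, hypothetical class module named FluentFormatterSketch that shows only that technique. It is not the actual ValidationErrorFormatter; its Target property, Apply method and default colors are assumptions:

```vba
'@Folder MVVM.Example
'@ModuleDescription "Hypothetical sketch of a fluent builder; not the actual ValidationErrorFormatter."
Option Explicit

Private Type TState
    Target As Object        'the MSForms control to format (late-bound)
    ErrorBackColor As Long
    ErrorForeColor As Long
    UsesBackColor As Boolean
    UsesForeColor As Boolean
    NormalBackColor As Long
    NormalForeColor As Long
    HasNormalColors As Boolean
End Type

Private this As TState

Public Property Set Target(ByVal RHS As Object)
    Set this.Target = RHS
End Property

'Builder method: records the setting, then returns Me so calls can be chained.
Public Function WithErrorBackgroundColor(Optional ByVal Color As Long = &HC0C0FF) As FluentFormatterSketch
    this.ErrorBackColor = Color 'default: light red, i.e. RGB(255, 192, 192)
    this.UsesBackColor = True
    Set WithErrorBackgroundColor = Me
End Function

'Builder method: records the setting, then returns Me so calls can be chained.
Public Function WithErrorForeColor(Optional ByVal Color As Long = &H80&) As FluentFormatterSketch
    this.ErrorForeColor = Color 'default: dark red, i.e. RGB(128, 0, 0)
    this.UsesForeColor = True
    Set WithErrorForeColor = Me
End Function

'Formats the target for the given validation state; restores the original colors when valid.
Public Sub Apply(ByVal HasError As Boolean)
    If this.Target Is Nothing Then Exit Sub
    If Not this.HasNormalColors Then 'remember the designer-set colors the first time around
        this.NormalBackColor = this.Target.BackColor
        this.NormalForeColor = this.Target.ForeColor
        this.HasNormalColors = True
    End If
    If this.UsesBackColor Then this.Target.BackColor = IIf(HasError, this.ErrorBackColor, this.NormalBackColor)
    If this.UsesForeColor Then this.Target.ForeColor = IIf(HasError, this.ErrorForeColor, this.NormalForeColor)
End Sub
```

Because every builder method returns the same instance, `Formatter.WithErrorBackgroundColor.WithErrorForeColor(vbRed)` is still `Formatter`, just configured twice.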

Value Converters

IBindingManager.BindPropertyPath can take an optional IValueConverter parameter when a conversion is needed between the source and the target, or between the target and the source. For example, the InverseBooleanConverter implementation is useful in a binding where True in the source needs to bind to False in the target, and vice versa.

The interface mandates the presence of Convert and ConvertBack functions, respectively invoked when the binding value is going to the target and the source. Again, pure functions and performance-sensitive implementations should be preferred over side-effecting code.

    '@Folder MVVM.Infrastructure.Bindings.Converters
    '@ModuleDescription "A value converter that inverts a Boolean value."
    '@PredeclaredId
    '@Exposed
    Option Explicit
    Implements IValueConverter

    Public Function Default() As IValueConverter
        GuardClauses.GuardNonDefaultInstance Me, InverseBooleanConverter
        Set Default = InverseBooleanConverter
    End Function

    Private Function IValueConverter_Convert(ByVal Value As Variant) As Variant
        IValueConverter_Convert = Not CBool(Value)
    End Function

    Private Function IValueConverter_ConvertBack(ByVal Value As Variant) As Variant
        IValueConverter_ConvertBack = Not CBool(Value)
    End Function

Converters used in one-directional bindings don’t necessarily need to make both functions return a value that makes sense: sometimes a value can be converted to another but cannot round-trip back to the original, and that’s fine.

String Formatting

One aspect of property bindings I haven’t tackled yet is the whole StringFormat deal. Once that is implemented and working, the string representation of the target control will be better separated from its actual value, and a sensible default format for some data types (Date, Currency) could even be inferred from the type of the source property!

Another thing string formatting would enable is the ability to interpolate the value within a string. For example, there could be a property binding defined like this:

.BindPropertyPath ViewModel, "NetAmount", Me.NetAmountBox, StringFormat:="USD$ {0:C2}"

The NetAmountBox would then read “USD$ 1,386.77” given the value 1386.77. The binding would never get confused, because it would always know that the underlying value is a numeric value of 1386.77, not a formatted string. Until that is done, string formatting probably needs to involve custom value converters. Once string formatting works in property bindings, any converter will be invoked first: it’s always going to be the converted value that gets formatted.
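In the meantime, a custom converter can do the formatting. Below is a sketch of a hypothetical UsdAmountConverter class module. It assumes the IValueConverter interface from the infrastructure code, with the Convert/ConvertBack signatures seen in InverseBooleanConverter. Format$ honors the user’s regional settings, so the separators in the output may differ from “1,386.77”, but the value still round-trips, because CDbl parses using the same settings:

```vba
'@Folder MVVM.Example
'@ModuleDescription "Hypothetical sketch: formats a numeric source value as a USD amount string."
Option Explicit
Implements IValueConverter

Private Const Prefix As String = "USD$ "

'Source to target: 1386.77 becomes "USD$ 1,386.77" (separators depend on regional settings).
Private Function IValueConverter_Convert(ByVal Value As Variant) As Variant
    IValueConverter_Convert = Prefix & Format$(Value, "#,##0.00")
End Function

'Target to source: strips the prefix if present, then parses the number back.
'Non-numeric text raises run-time error 13 (type mismatch).
Private Function IValueConverter_ConvertBack(ByVal Value As Variant) As Variant
    Dim Text As String
    Text = CStr(Value)
    If Left$(Text, Len(Prefix)) = Prefix Then Text = Mid$(Text, Len(Prefix) + 1)
    IValueConverter_ConvertBack = CDbl(Text)
End Function
```

Unlike the planned StringFormat, a converter like this one makes the target’s text the only representation the binding sees. That is the confusion that string formatting in property bindings would avoid.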

ViewModel

Every ViewModel class is inherently application-specific and will look different, but there will be recurring themes:

- Every field in the View wants to bind to a ViewModel property, and then you’ll want extra properties for various other things, so the ViewModel quickly grows more properties than comfort allows. Make smaller “ViewModel” classes by regrouping related properties, and bind with a property path rather than a plain property name.

- Property changes need to propagate to the “main” ViewModel (the “data context”) somehow, so making all ViewModel classes fire a PropertyChanged event as appropriate is a good idea. Hold a WithEvents reference to the “child” ViewModel, and handle propagation by raising the “parent” ViewModel’s own PropertyChanged event, all the way up to the “main” ViewModel, where the handler nudges command bindings to evaluate whether commands can execute. One solution could be to register all command bindings with some CommandManager object that would have to implement IHandlePropertyChanged, which would relieve the ViewModel of needing to do this.

Each ViewModel should implement at least two interfaces:

- IViewModel, because we need a way to access the validation error handler and this interface makes a good spot for it.

- INotifyPropertyChanged, to notify data bindings when a ViewModel property changes.

Here is the IViewModel implementation for the example code. The idea is really to expose properties for the View to bind, and we must not forget to notify handlers when a property value changes. Notice the RHS-checking logic in the Property Let members, which only notify when the value actually changes:

    '@Folder MVVM.Example
    '@ModuleDescription "An example ViewModel implementation for some dialog."
    '@PredeclaredId
    Option Explicit
    Implements IViewModel
    Implements INotifyPropertyChanged

    Public Event PropertyChanged(ByVal Source As Object, ByVal PropertyName As String)

    Private Type TViewModel
        'INotifyPropertyChanged state:
        Handlers As Collection
        'CommandBindings:
        SomeCommand As ICommand
        'Read/Write PropertyBindings:
        SourcePath As String
        SomeOption As Boolean
        SomeOtherOption As Boolean
    End Type

    Private this As TViewModel
    Private WithEvents ValidationHandler As ValidationManager

    Public Function Create() As IViewModel
        GuardClauses.GuardNonDefaultInstance Me, ExampleViewModel, TypeName(Me)
        Dim result As ExampleViewModel
        Set result = New ExampleViewModel
        Set Create = result
    End Function

    Public Property Get Validation() As IHandleValidationError
        Set Validation = ValidationHandler
    End Property

    Public Property Get SourcePath() As String
        SourcePath = this.SourcePath
    End Property

    Public Property Let SourcePath(ByVal RHS As String)
        If this.SourcePath <> RHS Then
            this.SourcePath = RHS
            OnPropertyChanged "SourcePath"
        End If
    End Property

    Public Property Get SomeOption() As Boolean
        SomeOption = this.SomeOption
    End Property

    Public Property Let SomeOption(ByVal RHS As Boolean)
        If this.SomeOption <> RHS Then
            this.SomeOption = RHS
            OnPropertyChanged "SomeOption"
        End If
    End Property

    Public Property Get SomeOtherOption() As Boolean
        SomeOtherOption = this.SomeOtherOption
    End Property

    Public Property Let SomeOtherOption(ByVal RHS As Boolean)
        If this.SomeOtherOption <> RHS Then
            this.SomeOtherOption = RHS
            OnPropertyChanged "SomeOtherOption"
        End If
    End Property

    Public Property Get SomeCommand() As ICommand
        Set SomeCommand = this.SomeCommand
    End Property

    Public Property Set SomeCommand(ByVal RHS As ICommand)
        Set this.SomeCommand = RHS
    End Property

    Public Property Get SomeOptionName() As String
        SomeOptionName = "Auto"
    End Property

    Public Property Get SomeOtherOptionName() As String
        SomeOtherOptionName = "Manual/Browse"
    End Property

    Public Property Get Instructions() As String
        Instructions = "Lorem ipsum dolor sit amet, consectetur adipiscing elit."
    End Property

    Private Sub OnPropertyChanged(ByVal PropertyName As String)
        RaiseEvent PropertyChanged(Me, PropertyName)
        Dim Handler As IHandlePropertyChanged
        For Each Handler In this.Handlers
            Handler.OnPropertyChanged Me, PropertyName
        Next
    End Sub

    Private Sub Class_Initialize()
        Set this.Handlers = New Collection
        Set ValidationHandler = ValidationManager.Create
    End Sub

    Private Sub INotifyPropertyChanged_OnPropertyChanged(ByVal Source As Object, ByVal PropertyName As String)
        OnPropertyChanged PropertyName
    End Sub

    Private Sub INotifyPropertyChanged_RegisterHandler(ByVal Handler As IHandlePropertyChanged)
        this.Handlers.Add Handler
    End Sub

    Private Property Get IViewModel_Validation() As IHandleValidationError
        Set IViewModel_Validation = ValidationHandler
    End Property

    Private Sub ValidationHandler_PropertyChanged(ByVal Source As Object, ByVal PropertyName As String)
        OnPropertyChanged PropertyName
    End Sub

Nothing much of interest here, other than the INotifyPropertyChanged implementation and the fact that a ViewModel is really just a fancy word for a class that exposes a bunch of properties that magically keep in sync with UI controls!

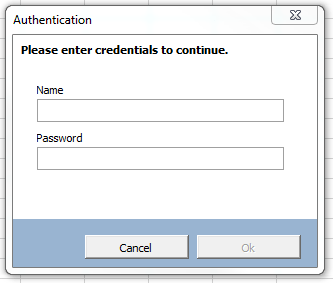

View

In a Smart UI, the UserForm’s code-behind is, more often than not, a complete wreck. In Model-View-Presenter it quickly gets cluttered with many one-liner event handlers, and something just feels clunky about the MVP pattern. Now, I’m trying really hard, but I can’t think of a single reason to not want UserForm code-behind to look like this all the time… this is absolutely all of it, there’s no cheating going on:

    '@Folder MVVM.Example
    '@ModuleDescription "An example implementation of a View."
    Option Explicit
    Implements IView
    Implements ICancellable

    Private Type TView
        'IView state:
        ViewModel As ExampleViewModel
        'ICancellable state:
        IsCancelled As Boolean
        'Data binding helper dependency:
        Bindings As IBindingManager
    End Type

    Private this As TView

    '@Description "A factory method to create new instances of this View, already wired-up to a ViewModel."
    Public Function Create(ByVal ViewModel As ExampleViewModel, ByVal Bindings As IBindingManager) As IView
        GuardClauses.GuardNonDefaultInstance Me, ExampleView, TypeName(Me)
        GuardClauses.GuardNullReference ViewModel, TypeName(Me)
        GuardClauses.GuardNullReference Bindings, TypeName(Me)
        Dim result As ExampleView
        Set result = New ExampleView
        Set result.Bindings = Bindings
        Set result.ViewModel = ViewModel
        Set Create = result
    End Function

    Private Property Get IsDefaultInstance() As Boolean
        IsDefaultInstance = Me Is ExampleView
    End Property

    '@Description "Gets/sets the ViewModel to use as a context for property and command bindings."
    Public Property Get ViewModel() As ExampleViewModel
        Set ViewModel = this.ViewModel
    End Property

    Public Property Set ViewModel(ByVal RHS As ExampleViewModel)
        GuardClauses.GuardExpression IsDefaultInstance, TypeName(Me)
        GuardClauses.GuardNullReference RHS
        Set this.ViewModel = RHS
        InitializeBindings
    End Property

    '@Description "Gets/sets the binding manager implementation."
    Public Property Get Bindings() As IBindingManager
        Set Bindings = this.Bindings
    End Property

    Public Property Set Bindings(ByVal RHS As IBindingManager)
        GuardClauses.GuardExpression IsDefaultInstance, TypeName(Me)
        GuardClauses.GuardDoubleInitialization this.Bindings, TypeName(Me)
        GuardClauses.GuardNullReference RHS
        Set this.Bindings = RHS
    End Property

    Private Sub BindViewModelCommands()
        With Bindings
            .BindCommand ViewModel, Me.OkButton, AcceptCommand.Create(Me)
            .BindCommand ViewModel, Me.CancelButton, CancelCommand.Create(Me)
            .BindCommand ViewModel, Me.BrowseButton, ViewModel.SomeCommand
            '...
        End With
    End Sub

    Private Sub BindViewModelProperties()
        With Bindings
            .BindPropertyPath ViewModel, "SourcePath", Me.PathBox, _
                Validator:=New RequiredStringValidator, _
                ErrorFormat:=AggregateErrorFormatter.Create(ViewModel, _
                    ValidationErrorFormatter.Create(Me.PathBox).WithErrorBackgroundColor.WithErrorBorderColor, _
                    ValidationErrorFormatter.Create(Me.InvalidPathIcon).WithTargetOnlyVisibleOnError("SourcePath"), _
                    ValidationErrorFormatter.Create(Me.ValidationMessage1).WithTargetOnlyVisibleOnError("SourcePath"))
            .BindPropertyPath ViewModel, "Instructions", Me.InstructionsLabel
            .BindPropertyPath ViewModel, "SomeOption", Me.OptionButton1
            .BindPropertyPath ViewModel, "SomeOtherOption", Me.OptionButton2
            .BindPropertyPath ViewModel, "SomeOptionName", Me.OptionButton1, "Caption", OneTimeBinding
            .BindPropertyPath ViewModel, "SomeOtherOptionName", Me.OptionButton2, "Caption", OneTimeBinding
            '...
        End With
    End Sub

    Private Sub InitializeBindings()
        If ViewModel Is Nothing Then Exit Sub
        BindViewModelProperties
        BindViewModelCommands
        Bindings.ApplyBindings ViewModel
    End Sub

    Private Sub OnCancel()
        this.IsCancelled = True
        Me.Hide
    End Sub

    Private Property Get ICancellable_IsCancelled() As Boolean
        ICancellable_IsCancelled = this.IsCancelled
    End Property

    Private Sub ICancellable_OnCancel()
        OnCancel
    End Sub

    Private Sub IView_Hide()
        Me.Hide
    End Sub

    Private Sub IView_Show()
        Me.Show vbModal
    End Sub

    Private Function IView_ShowDialog() As Boolean
        Me.Show vbModal
        IView_ShowDialog = Not this.IsCancelled
    End Function

    Private Property Get IView_ViewModel() As Object
        Set IView_ViewModel = this.ViewModel
    End Property

Surely some tweaks will be made over the next couple of weeks as I put the UI design pattern to a more extensive workout with the Rubberduck website content maintenance app – but having used MVVM in C#/WPF for many years, I already know that this is how I want to be coding VBA user interfaces going forward.

I really love how the language has had the ability to make this pattern work, all along.

To be continued…